Prof. Christian Dietrich receives the Wissenschaftspreis Hannover 2020 (Hanover Science Award, endowed with 4,000 EUR) for his excellent dissertation Interaction-Aware Analysis and Optimization of Real-Time Application and Operating System. Prof. Volker Epping, President of the University and Deputy Chairman of the University Society, awarded the distinctions to the early-career scientists. Words of greeting were also spoken by Falko Mohrs, Lower Saxony Minister for Science and Culture, as well as Dirk von der Crone, member of the Executive Board of the cooperating partner SwissLife Germany.

In his laudatory speech, Prof. Daniel Lohmann praised Christian's rapid academic career, which he had the pleasure of accompanying from student assistant in the first semester to a full professorship.

Congrats, Christian!

Abstract: In many industrial sectors, device manufacturers are moving away from expensive special-purpose hardware units and consolidating their systems on commodity hardware. As part of this change, developers are enabled to run their applications on general-purpose operating systems like Linux, which already supports thousands of different devices out of the box and can be used in a wide range of target scenarios. Furthermore, the Linux ecosystem allows them to integrate existing implementations of standard functionality in the form of shared libraries.

However, as the libraries and the Linux kernel are designed as generic building blocks in order to support as many applications as possible, they cannot make assumptions about specific use cases for a single-purpose device. This generality leads to unnecessary overheads in narrowly defined target scenarios, as unneeded components do not only take up space on the target system but have to be maintained over the lifetime of the device as well. While the Linux kernel provides a configuration system to disable unneeded functionality like device drivers, determining the required features from over 16 000 options is an infeasible task. Even worse, most shared libraries cannot be customized even though only around 10 percent of their functions are ever used by applications.

In this thesis, I present my approaches for the automated identification and removal of unnecessary components in all layers of the software stack. As the configuration system is an integral part of the Linux kernel, we embrace its presence and automatically generate custom-fitted configurations for observed target scenarios with the help of an extracted variability model. For the much more diverse realm of shared libraries, with different programming languages, build systems, and a lack of configurability, I demonstrate a different approach. By identifying individual functions as logically distinct units, we construct a symbol-level dependency graph across the applications and all their required libraries. We then remove unneeded code at the binary level and rearrange the remaining parts to take up minimal space in the binary file by formulating their placement as an optimization problem. To lower the number of unnecessary updates to unused components in a deployed system, I lastly present an automated method to determine the impact of software changes on a target scenario and provide guidance for developers on whether they need to update their systems.
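The symbol-level dependency graph mentioned above can be illustrated with a small reachability computation. This is only a minimal sketch, not the thesis implementation: the symbol names, the `deps` mapping, and the `reachable_symbols` helper are hypothetical and chosen purely for illustration.

```python
from collections import deque

def reachable_symbols(deps, roots):
    """Return every symbol reachable from the roots in the dependency graph."""
    seen = set(roots)
    queue = deque(roots)
    while queue:
        sym = queue.popleft()
        for callee in deps.get(sym, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen

# Hypothetical graph: one application entry point plus functions of two libraries.
deps = {
    "app:main": ["libfoo:parse", "libc:printf"],
    "libfoo:parse": ["libc:malloc"],
    "libfoo:dump": ["libc:printf"],
    "libc:printf": ["libc:write"],
    "libc:malloc": [],
    "libc:write": [],
    "libc:qsort": [],
}

needed = reachable_symbols(deps, ["app:main"])
# Functions that no application entry point can reach are removal candidates.
unneeded = sorted(set(deps) - needed)
print(unneeded)
```

In this toy graph, only the functions that `app:main` cannot reach end up in `unneeded`. A real tool would additionally have to account for indirect calls and dynamically loaded symbols.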

Applying these techniques to different target systems, I demonstrate that we can disable up to 87 percent of configuration options in a DEBIAN Linux kernel, shrink the size of an embedded OPENWRT kernel by 59 percent, and speed up the boot process of the embedded system by 21 percent. As part of the shared library tailoring process, we can remove 13 060 functions from all libraries in OPENWRT and reduce their total size by 31 percent. In the MEMCACHED Docker container, we identify 381 entirely unneeded shared libraries and shrink the container image size by 82 percent. An analysis of the development history of two large library projects over the course of more than two years further shows that between 68 and 82 percent of all changes are not required for an OPENWRT appliance, reducing the number of patch days by up to 69 percent.

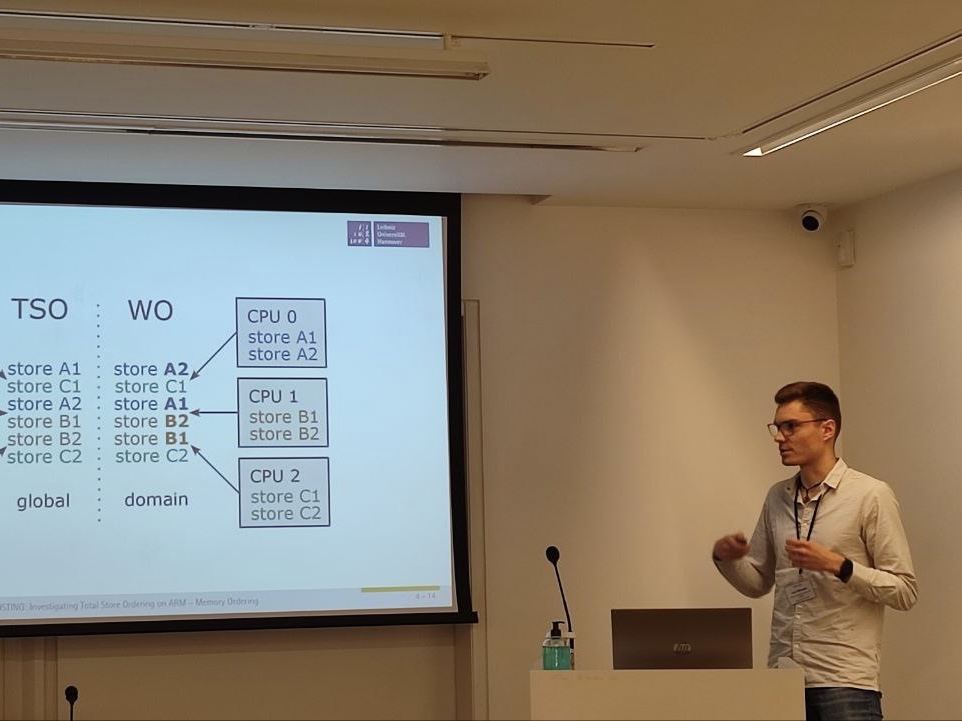

Lars Wrenger presents our paper TOSTING: Investigating Total Store Ordering on ARM at the 36th GI/ITG International Conference on Architecture of Computing Systems (ARCS '23) in Athens, Greece. In the paper, we analyse the performance impact of the Intel Total Store Ordering (TSO) memory model in comparison to ARM's weak memory ordering model. For this, we exploit the fact that Apple has implemented TSO on its M1 processors for the Rosetta compatibility layer. TOSTING is related to the ParPerOS project.

For this work, we got a best paper award! Congrats, Lars!

Abstract: Embedded systems are an omnipresent part of our daily life. They are ubiquitously present in almost every moment to support and secure our activity. At the same time, we expect these systems to be highly cost-efficient in both development and production. Without restrictions, we expect them to work reliably and always respond timely and as expected. This leads to an immense pressure on the development process of new systems, especially with the large number of units and the further increasing occurrence of these systems.

A finished system has a defined task according to its environment and thus a defined software application that it executes. For the tools used to implement and execute it, this is not the case. Those tools are not specifically designed for that exact task, but for a variety of possible applications. They provide a wide range of functionality and flexibility, and, hence, allow a wide usage spectrum. In this thesis, I focus on the real-time operating system which serves as the base software layer to execute the designated application. Such an operating system provides a wide range of abstractions, system object classes, and associated interaction methods, of which an actual application implementation uses only a subset. Dynamically configured systems, which I consider in this thesis, require all system objects and their interworking to be instantiated and configured exclusively by code at run time. As a result, an operating system needs to be able to accept every system call at every point in time, even if not issued by the actual application. This flexibility causes pessimistic assumptions for interaction patterns that may never appear and forces dynamic management of system objects and state.

To avoid this flexibility when the application does not need it, I present methods to systematically and automatically specialize formerly dynamic system calls statically. While considering the requirements of a given application, these methods improve the non-functional properties of the resulting specialized system without changing the functional properties. Using static analyses, I determine the system objects forming the application structure and their possible interactions. Backed by this knowledge, I apply static specializations to both the startup and the working phase of the application at compile time. In the startup phase, I apply static system-object instantiation by transforming the effects of the system calls into compile-time constants. To improve the working phase, I statically replace the generic implementations of system objects and their interaction system calls with versions suited to the actual usage patterns. With these specializations, I am able to reduce both the run-time and the memory requirements of a specialized system. I can speed up system startup by up to 67 %. During the working phase, an execution-time reduction of up to 43 % for a single system call is achievable.

With this thesis, I show that an automatic application-aware static specialization of formerly dynamic system calls is both feasible and beneficial. I am able to precompute the effects of dynamic system calls during compile time, and, thus, reduce both run-time overhead and memory requirements, while removing unused system-call implementations. By using system call implementations specialized to the actual application, I reduce superfluous administrative overhead, and, hence, reduce runtime overhead even further. This specialization takes place without any disturbance for application parts, truly relying on the dynamic operating system interface, as all specializations are applied in a non-breaking manner. This results in a continuous transition between dynamically and statically configured systems, improving the system performance by only removing superfluous flexibility without ever violating functional properties.

Lars Wrenger receives the award for the best master thesis in the field of operating systems. The award is granted annually by the SIG on Operating Systems of the German Computer Association (GI Fachgruppe Betriebssysteme) solely on the basis of scientific excellence. It includes prize money of 500 €. Congrats, Lars!

In OS memory management, the page-frame allocator is the most fundamental component, as it manages the physical memory. In his thesis Lo(ck|g)-free Page Allocator for Non-Volatile Memory in the Linux Kernel, Lars designs, implements, and evaluates a new, highly scalable page-frame allocator for volatile and non-volatile memories. This excellent work is now continued within the ParPerOS project.

Tobias Landsberg presents our paper TASTING: Reuse Test-case Execution by Global AST Hashing at the 17th International Conference on Software Technologies (ICSOFT '22) in Lisbon. In the paper, we describe TASTING, an approach for efficiently selecting and reusing regression-test executions across program changes, branches, and variants in continuous integration settings. TASTING can dramatically speed up test-suite executions by recursively composing hashes of all relevant syntactic elements into a semantic fingerprint of the test and its execution environment, so identical test executions can easily be detected and skipped. This is an important building block for variant-aware testing in the CADOS project.

Tobias got the Best Student Paper award for this work!
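The idea of recursively composing hashes of syntactic elements can be sketched in a few lines. The following is a simplified illustration built on Python's standard `ast` and `hashlib` modules, not the TASTING implementation itself; the helpers `node_hash`, `fingerprint`, and `run_test_once` as well as the in-memory `cache` are assumptions made for this example.

```python
import ast
import hashlib

def node_hash(node):
    """Hash a node from its type, its scalar fields, and its children's hashes."""
    h = hashlib.sha256(type(node).__name__.encode())
    for name, value in ast.iter_fields(node):
        h.update(name.encode())
        children = value if isinstance(value, list) else [value]
        for child in children:
            if isinstance(child, ast.AST):
                h.update(node_hash(child).encode())  # compose child hashes
            else:
                h.update(repr(child).encode())       # identifiers, constants, ...
    return h.hexdigest()

def fingerprint(source):
    """Fingerprint of a whole source text; comments and layout do not enter the AST."""
    return node_hash(ast.parse(source))

cache = {}  # hypothetical store: fingerprint -> earlier test result

def run_test_once(source, run):
    """Run the test only if no result is cached for this fingerprint."""
    key = fingerprint(source)
    if key not in cache:
        cache[key] = run()
    return cache[key]

original = "def f(x):\n    return x + 1\n"
reformatted = "def f(x):  # add one\n\n    return x+1\n"
changed = "def f(x):\n    return x + 2\n"

runs = []
for src in (original, reformatted, changed):
    run_test_once(src, lambda: runs.append(src) or "passed")
print(len(runs))
```

Because source positions are stored as node attributes rather than fields, the reformatted variant produces the same fingerprint as the original, and its test execution is skipped.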

- ParPerOS: Parallel Persistency OS (DFG: LO 1719/8-1 and DI 2840/2-1)

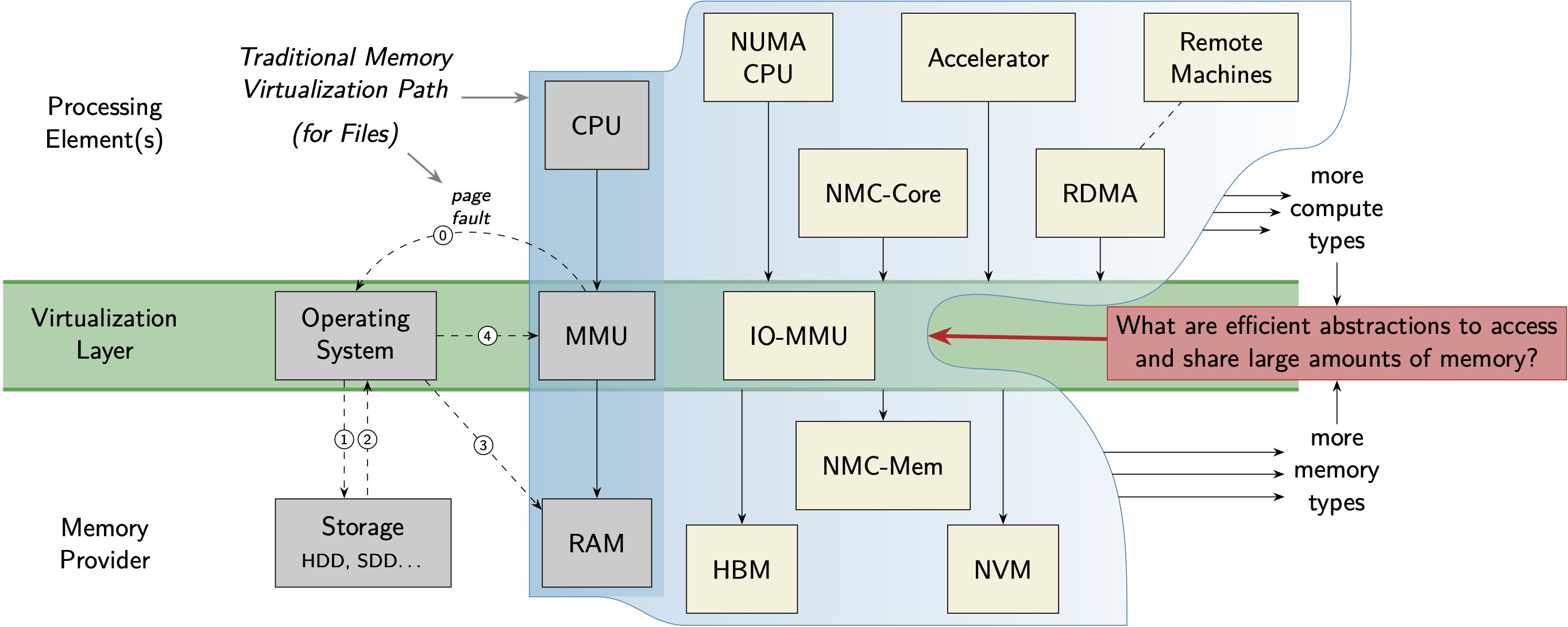

- In ParPerOS, we examine new abstractions for unified but efficient and optionally crash-consistent low-level memory management for data objects in heterogeneous memory systems that consist of volatile, persistent, distributed and other types of main memory.

Our former SRA member and current project partner in the ATLAS and ParPerOS projects, Christian Dietrich, holds his inaugural lecture on New Directions for Managing Memory:

Abstract: Traditionally, memory is the scarce resource that operating systems virtualize for their users. However, current hardware trends, like ultra-fast NVMe SSDs and non-volatile RAM, force us to rethink operating system-mediated management. We no longer have to manage scarcity, but we have to swim in the new abundance without drowning. In his inaugural lecture, Christian Dietrich will present three ongoing research projects that center around the topic of memory management.

The event starts at 14:00 and can be followed via Zoom.

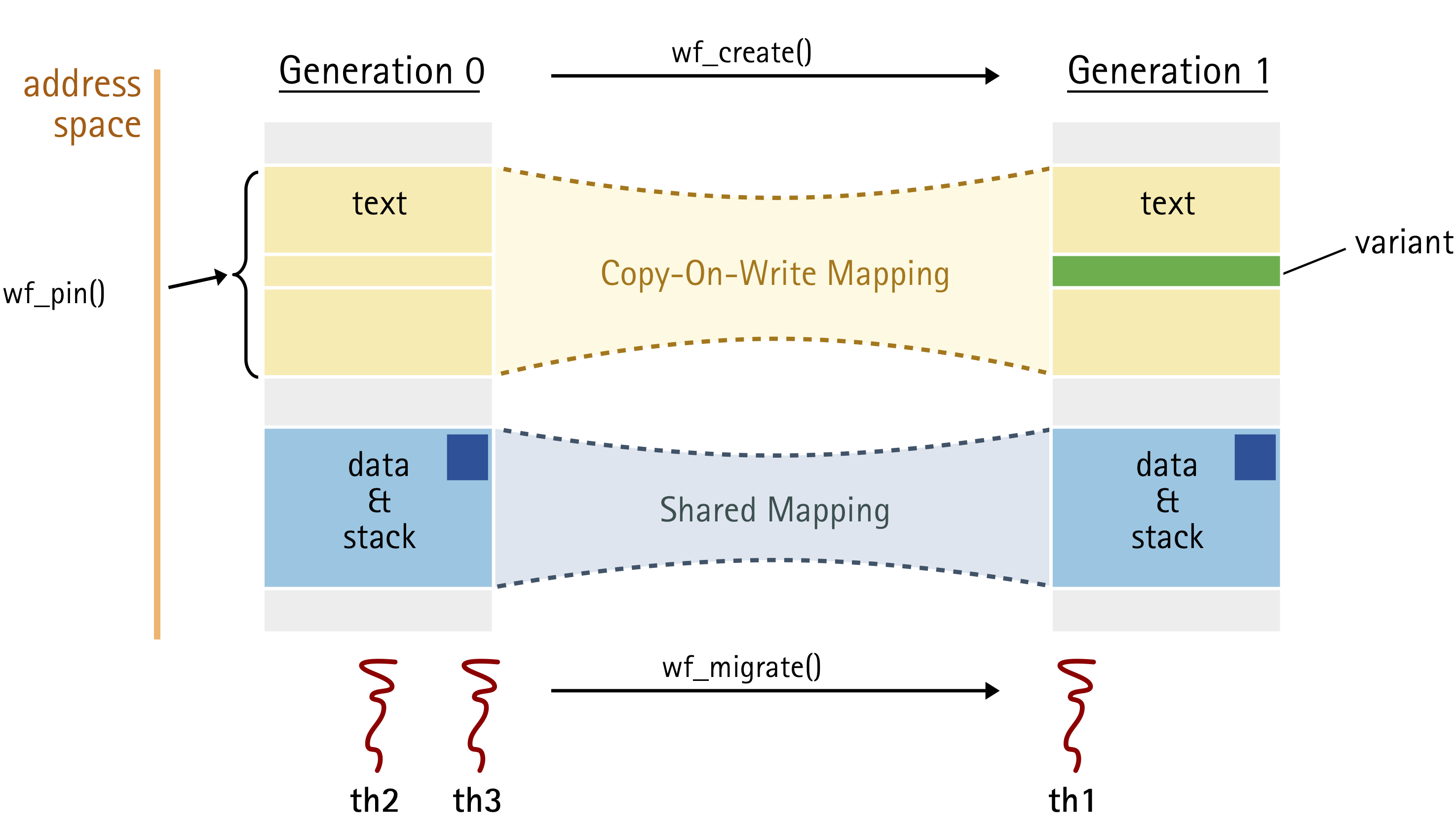

- ATLAS: Adaptable Thread-Level Address Spaces (DFG: LO 1719/7-1 and DI 2840/1-1)

- In the ATLAS project, we investigate dynamic specialization and containment by means of thread-level address-space variations.

Abstract: Computer-based automation in industrial appliances led to a growing number of logically dependent, but physically separated embedded control units per appliance. Many of those components are safety-critical systems and require adherence to safety standards, which is inconsonant with the relentless demand for features in those appliances. Features lead to a growing number of control units per appliance, and to an increasing complexity of the overall software stack, which is unfavourable for safety certifications. Modern CPUs provide means to revise traditional separation-of-concerns design primitives: the consolidation of systems, which yields new engineering challenges that concern the entire software and system stack.

Multi-core CPUs favour the economic consolidation of formerly separated systems on one efficient single hardware unit. Nonetheless, the system architecture must provide means to guarantee freedom from interference between domains of different criticality. System consolidation demands architectural and engineering strategies to fulfil requirements (e.g., real-time or certifiability criteria) in safety-critical environments.

In parallel, there is an ongoing trend to substitute ordinary proprietary base platform software components with mature OSS variants for economic and engineering reasons. There are fundamental differences between the development processes of OSS and proprietary software. OSS in safety-critical systems requires development-process assessment techniques to build an evidence-based foundation for certification efforts that is based upon empirical software engineering methods.

In this thesis, I approach the problem from both sides: the software engineering and the system engineering perspective. In the first part of this thesis, I focus on the assessment of OSS components: I develop software engineering techniques that make it possible to quantify characteristics of distributed OSS development processes. I show that ex-post analyses of software development processes can serve as a foundation for certification efforts, as required for safety-critical systems.

In the second part of this thesis, I present a system architecture based on OSS components that allows for consolidation of mixed-criticality systems on a single platform. To this end, I exploit virtualisation extensions of modern CPUs to strictly isolate domains of different criticality. The proposed architecture shall eradicate any remaining hypervisor activity in order to preserve real-time capabilities of the hardware by design, while guaranteeing strict isolation across domains.

- CADOS: Configurability-Aware Development of Operating Systems (DFG: LO 1719/3-2)

- In the CADOS project, we investigate scalable methods and tools to deal with the implementation of variability across all implementation layers of modern system software.

After many fruitful years with dozens of papers, great lectures, and a lot of fun together, Christian Dietrich leaves our group to start his junior professorship (W1-TT-W3) with a new operating system group at TUHH. We will continue our work together, but will nevertheless miss him a lot, and we wholeheartedly congratulate Prof. Dr.-Ing. Christian Dietrich on this great step in his career!

Christian Dietrich receives an award for the best doctoral thesis in the field of operating systems. The award is granted annually by the SIG on Operating Systems of the German Computer Association (GI Fachgruppe Betriebssysteme) solely on the basis of scientific excellence. It includes prize money of 500 €. Congrats, Christian!

In his dissertation Interaction-Aware Analysis and Optimization of Real-Time Application and Operating System, Christian designs and implements a control-flow-sensitive whole-system view and analysis on the interactions within real-time systems.

With this approach, he can overcome many inefficiencies that arise from analyses that have an isolating focus on individual system components.

Furthermore, the interaction-aware methods keep close to the actual implementation, and therefore are able to consider the behavioral patterns of the finally deployed real-time computing system.

Florian Rommel presents our paper From Global to Local Quiescence: Wait-Free Code Patching of Multi-Threaded Processes at OSDI '20, by video because of the coronavirus pandemic.

In the paper, we present WfPatch, a wait-free approach to inject code changes into running multi-threaded programs. Instead of having to stop the world before applying a patch, WfPatch can gradually apply it to each thread individually at a local point of quiescence, while all other threads can make uninterrupted progress.

WfPatch is the first outcome of our novel concept on adaptable thread-level address spaces, which we are investigating in the ATLAS project.

Tobias Landsberg receives the award for the best master thesis in the field of operating systems. The award is granted annually by the SIG on Operating Systems of the German Computer Association (GI Fachgruppe Betriebssysteme) solely on the basis of scientific excellence. It includes prize money of 500 €. Congrats, Tobias!

In his thesis Analyzing and Optimizing TLB-Induced Thread Migration Costs on Linux/ARM, Tobias evaluates whether it is feasible and beneficial to pre-warm the TLB (Translation Look-Aside Buffer) when a thread is migrated to another core. He analyzes existing ARM cores, presents and evaluates possible hardware extensions in gem5, and provides a complete Linux integration for the system.

Abstract: Mechanical and electronic automation was a key component of the technological advances in the last two hundred years. With the use of special-purpose machines, manual labor was replaced by mechanical motion, leaving workers with the operation of these machines, before this task, too, was taken over by embedded control systems. With the advances of general-purpose computing, the development of these control systems shifted more and more from a problem-specific one to a one-size-fits-all mentality as the trade-off between per-instance overheads and development costs was in favor of flexible and reusable implementations. However, with a scaling factor of thousands, if not millions, of deployed devices, overheads and inefficiencies accumulate, calling for a higher degree of specialization.

For the area of real-time operating systems, which form the base layer for many of these computerized control systems, we deploy way more flexibility than what is actually required for the applications that run on top of it. Since only the solution, but not the problem, became less specific to the control problem at hand, we have the chance to cut away inefficiencies, improve on system-analyses results, and optimize the resource consumption. However, such a tailoring will only be favorable if it can be performed without much developer interaction and in an automated fashion. Here, real-time systems are a good starting point, since we already have to have a large degree of static knowledge in order to guarantee their timeliness. Until now, this static nature is not exploited to its full extent and optimization potentials are left unused.

The requirements of a system, with regard to the RTOS, manifest in the interactions between the application and the kernel. Threads request resources from the RTOS, which in return determines and enforces a scheduling order that will ensure the timely completion of all necessary computations. Since the RTOS runs only in the exception, its reaction to requests from the application (or from the environment) is its defining feature.

In this thesis, I will grasp these interactions, and thereby the required RTOS semantics, in a control-flow-sensitive fashion. Extracted automatically, this knowledge about the reciprocal influence allows me to fit the implementation of a system closer to its actual requirements. The result is a system that is not only in its usage a special-purpose system, but also in its implementation and in its provided guarantees.

In the development of my approach, it became clear that the focus on these interactions is not only highly fruitful for the optimization of a system, but also for its end-to-end analysis. Therefore, this thesis does not only provide methods to reduce the kernel-execution overhead and a system's memory consumption, but it also includes methods to calculate tighter response-time bounds and to give guarantees about the correct behavior of the kernel. All these contributions are enabled by my proposed interaction-aware methodology that takes the whole system, RTOS and application, into account.

With this thesis, I show that a control-flow-sensitive whole-system view on the interactions is feasible and highly rewarding. With this approach, we can overcome many inefficiencies that arise from analyses that have an isolating focus on individual system components. Furthermore, the interaction-aware methods keep close to the actual implementation, and therefore are able to consider the behavioral patterns of the finally deployed real-time computing system.

Abstract: In today's science and industry, we often deal with systems consisting of problems that are very complex and not easy to solve. Because of the increasing complexity and the partially missing information, it is already no longer possible to solve such problems, which are classified as black-box problems, by hand. To find the maximum or minimum, one resorts to optimization methods that make it possible to search for, and possibly find, an optimal solution to the problem. In recent years, stochastic methods have proven to be very well suited to solving such problems. The advantage of stochastic methods is that they do not use the gradient of the problem to be optimized, so they can be applied successfully to both large and complex optimization problems. This versatility, however, comes at a price. There are three main aspects that impair the efficiency of the solution:

- Real-world problems are becoming ever larger and more complicated, or they must be solved in a very short time, which requires considerable resources in time and hardware.

- Optimization problems are characterized by multiple local optima, which call for a procedure that avoids premature convergence.

- Algorithms require some problem-specific adjustments of their behavioral parameters to achieve better results.

Investigations in this thesis have shown that these adjustments lead to better results. Owing to the adaptive nature of the framework, it can be used on many computer architectures and applied to many problems.
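As a rough illustration of a gradient-free stochastic method on a problem with several local optima, the following sketch applies plain random search to a one-dimensional function. It is not the framework from the thesis: the `objective` function, the search interval, the sample count, and the seed are all hypothetical choices made for this example.

```python
import random

def objective(x):
    # Hypothetical black-box function with two local minima (near x = -2 and x = 2).
    return (x * x - 4) ** 2 + x

def random_search(f, low, high, samples, seed=0):
    """Sample uniformly at random and keep the best point; no gradient is used."""
    rng = random.Random(seed)
    best_x = rng.uniform(low, high)
    best_y = f(best_x)
    for _ in range(samples - 1):
        x = rng.uniform(low, high)
        y = f(x)
        if y < best_y:
            best_x, best_y = x, y
    return best_x, best_y

best_x, best_y = random_search(objective, -3.0, 3.0, samples=5000)
print(f"best x = {best_x:.3f}, f(x) = {best_y:.3f}")
```

Because every sample is drawn independently over the whole interval, the search cannot get stuck in the shallower minimum near x = 2. The price is a large number of function evaluations, which corresponds to the resource cost named in the first aspect above.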

Prof. Dr.-Ing. habil. Daniel Lohmann gave his inaugural lecture at the Faculty of Electrical Engineering and Computer Science. In his presentation "Klein und sicher – Automatisch anpassbare Systemsoftware für eingebettete Spezialzweckanwendungen", Prof. Lohmann provided an entertaining introduction into our research activities and the case for highly tailorable system software.

Björn Fiedler presents our paper Levels of Specialization in Real-Time Operating Systems at the 14th Workshop on Operating System Platforms for Embedded Real-Time Applications (OSPERT '18) in Barcelona. In the paper, we describe a taxonomy for the specialization of system software towards a specific application and provide showcases of the achievable benefits. We got a Best Paper Award for this work.

- AHA: Automated Hardware Abstraction in Operating-System Engineering (DFG: LO 1719/4-1)

- The goal of AHA is to improve nonfunctional properties of system software by a very deep, but fully automated specialization of the application-hardware bridge represented by the operating system. We investigate how alternative implementations that are mapped more directly to hardware features can be generated from a concrete application and its actual interactions with the operating system.

The criteria for the award are outstanding teaching performance over a period of at least two academic years at a university in Bavaria, participation of the students in the selection, and nomination by the respective university. Beyond all the measures that universities practice to assure the quality of teaching, the personal commitment and the pedagogical and didactic skills of the lecturer play a major role.